Neural language models (NLMs) have improved machine translation (MT) thanks to their ability to generalize well to long contexts. Our language model, partly inspired by human memory, is built on the Long Short-Term Memory (LSTM) architecture, a deep learning model capable of learning long-term dependencies. Things we will learn: the basics of RNNs, Keras, and a Q/A chatbot.

Deep learning and recurrent neural networks (RNNs) have fueled language modeling research in recent years, as they allow researchers to explore many tasks for which strong conditional independence assumptions are unrealistic. Deep neural networks have shown promising results, with varying degrees of success, on problems in vision, speech and text. For text data, the standard choice of network is the RNN. This kind of network is designed for sequential data: it applies the same function to each word or character of the text in turn, carrying a hidden state from one step to the next. These models have been successful in translation (Google Translate), speech recognition and language generation. In this workshop, we will write an RNN in Keras that can (1) classify the intent of an input and (2) give an appropriate answer.
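To make "applies the same function at every step" concrete, here is a minimal sketch of a plain (non-LSTM) recurrent step in pure Python. It is an illustration only, not the Keras model built in this workshop: the tiny vocabulary, the weight values and the names `rnn_step`, `run_rnn` and `one_hot` are all made up for the example.

```python
import math


def rnn_step(x, h, W_xh, W_hh, b):
    """One recurrent step: new_h = tanh(W_xh @ x + W_hh @ h + b)."""
    new_h = []
    for i in range(len(h)):
        total = b[i]
        total += sum(W_xh[i][j] * x[j] for j in range(len(x)))
        total += sum(W_hh[i][j] * h[j] for j in range(len(h)))
        new_h.append(math.tanh(total))
    return new_h


def run_rnn(inputs, W_xh, W_hh, b):
    """Apply the same step function, with the same weights, to every input."""
    h = [0.0] * len(b)  # initial hidden state
    for x in inputs:
        h = rnn_step(x, h, W_xh, W_hh, b)
    return h


def one_hot(index, size):
    """Encode a token id as a one-hot vector."""
    return [1.0 if i == index else 0.0 for i in range(size)]


# Hypothetical toy vocabulary and hand-picked weights (assumptions, not trained).
vocab = {"where": 0, "is": 1, "mary": 2}
W_xh = [[0.5, -0.3, 0.8], [0.1, 0.4, -0.6]]  # hidden_size x vocab_size
W_hh = [[0.2, -0.1], [0.3, 0.5]]             # hidden_size x hidden_size
b = [0.0, 0.0]

tokens = ["where", "is", "mary"]
inputs = [one_hot(vocab[t], len(vocab)) for t in tokens]
final_state = run_rnn(inputs, W_xh, W_hh, b)
```

Because the hidden state is threaded through the loop, the final state depends on word order, which is what lets a recurrent model treat text as a sequence rather than a bag of words. In a trained model the weights would be learned, and a layer on top of the final state would produce the intent or the answer.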

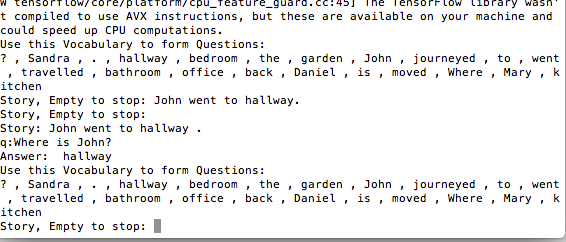

We will also see a basic example of a word-level question-answering bot using the Facebook bAbI dataset.
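As a rough sketch of what "word level" means here, the snippet below parses bAbI-style text into (story, question, answer) triples of word tokens. It assumes the usual bAbI task layout, where each line starts with a number that resets to 1 for a new story and question lines carry a tab-separated answer and supporting-fact id. The sample lines are illustrative ones written in that layout, and `tokenize` and `parse_babi` are hypothetical helper names, not part of any library.

```python
import re


def tokenize(sentence):
    """Split a sentence into word and punctuation tokens."""
    return re.findall(r"\w+|[^\w\s]", sentence)


def parse_babi(lines):
    """Turn bAbI-style lines into (story_tokens, question_tokens, answer) triples."""
    examples = []
    story = []
    for line in lines:
        line = line.strip()
        if not line:
            continue
        number, text = line.split(" ", 1)
        if int(number) == 1:
            story = []  # line number 1 marks the start of a new story
        if "\t" in text:
            # Question line: question <TAB> answer <TAB> supporting fact id(s)
            parts = text.split("\t")
            question, answer = parts[0], parts[1]
            # Flatten the sentences seen so far into one token list.
            context = [tok for sent in story for tok in sent]
            examples.append((context, tokenize(question), answer))
        else:
            story.append(tokenize(text))
    return examples


# Illustrative input in the assumed bAbI layout.
sample = [
    "1 Mary moved to the bathroom.",
    "2 John went to the hallway.",
    "3 Where is Mary? \tbathroom\t1",
    "4 Daniel went back to the hallway.",
    "5 Where is Daniel? \thallway\t4",
    "1 Sandra travelled to the office.",
    "2 Where is Sandra? \toffice\t1",
]
examples = parse_babi(sample)
```

Each triple can then be mapped to integer word ids and padded to a fixed length before being fed to a Keras model; that vectorization step is left out of this sketch.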